Test failure analysis with an LLM in a CI pipeline

On my current project, I'm the sole test engineer on a team with several developers. We merge pull requests only once regression tests are implemented, so I sometimes find myself under pressure to handle multiple tasks at once, especially when tests fail. Without my input, it can be difficult for the developers to understand what a test is doing and what exactly is causing a failure: is it a bug, or does the test need to be updated?

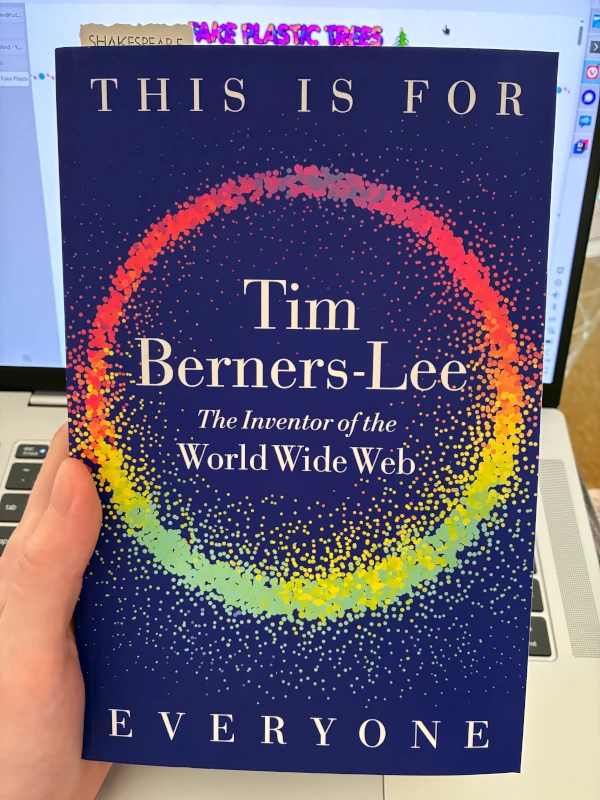

After reading many posts about AI in testing on softwaretestingweekly.com, I decided to give it a try and integrate Claude into our pipelines. The idea was to feed it test reports, grant it read-only access to the repository and the changes in a PR, and ask it to analyze everything and leave a comment with its findings.
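
To make the "feed it test reports" step more concrete, here is a minimal sketch of how the input for the model could be assembled. It assumes the test runner writes a JUnit-style XML report, which is an assumption and not something stated above; the function names `collect_failures` and `build_prompt` and the prompt wording are hypothetical, and the call to the model and the posting of the PR comment are deliberately left out.

```python
import xml.etree.ElementTree as ET


def collect_failures(junit_xml: str) -> list[dict]:
    """Return one record per failed or errored test case in a JUnit-style report."""
    root = ET.fromstring(junit_xml)
    failures = []
    # iter() finds <testcase> at any depth, so <testsuites> and <testsuite> roots both work.
    for case in root.iter("testcase"):
        for kind in ("failure", "error"):
            node = case.find(kind)
            if node is not None:
                failures.append({
                    "test": f"{case.get('classname', '')}.{case.get('name', '')}",
                    "kind": kind,
                    "message": node.get("message", ""),
                    "details": (node.text or "").strip(),
                })
                break  # record each test case at most once
    return failures


def build_prompt(failures: list[dict], pr_diff: str) -> str:
    """Combine the failed tests and the PR diff into a single prompt (hypothetical wording)."""
    lines = [
        "The following regression tests failed for this pull request.",
        "For each one, say whether it looks like a product bug or an outdated test, and why.",
        "",
    ]
    for f in failures:
        lines.append(f"Test: {f['test']} ({f['kind']})")
        lines.append(f"Message: {f['message']}")
        lines.append(f"Details:\n{f['details']}")
        lines.append("")
    lines.append("Pull request diff:")
    lines.append(pr_diff)
    return "\n".join(lines)
```

In a pipeline, the report and the diff would come from the CI job (for example, a report artifact and the output of `git diff` against the target branch), and the resulting prompt would be handed to whatever client is used to call the model.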

One month later, here are my thoughts.